What Is the AI Safety Index and Who's Setting the Standards?

AI policy analyst researching AI governance and regulatory frameworks.


The AI Safety Index is a tool for assessing the safety practices of leading artificial intelligence (AI) companies. Developed by the Future of Life Institute, it provides a structured evaluation of how well these companies manage the risks associated with their AI technologies. The index covers several dimensions of AI safety, including risk assessment, governance, and transparency, and is intended to serve as a benchmark for responsible AI development.

The primary purpose of the AI Safety Index is to enhance accountability within the AI industry. By publicly grading companies, it seeks to promote a culture of safety and responsibility in AI development. The goals include:

The Future of Life Institute (FLI) is a nonprofit organization focused on mitigating existential risks posed by powerful technologies, particularly AI. FLI plays a central role in the AI Safety Index by assembling a panel of independent experts who evaluate the safety practices of AI companies. Its commitment to safe AI development also shows in its ongoing research and advocacy work.

The AI Safety Index is graded by a panel of seven independent reviewers, consisting of prominent figures in AI research and ethics, including:

The panel's range of expertise is intended to make the evaluations thorough and well informed.

FLI collaborates with various regulatory bodies to align the AI Safety Index with emerging standards for AI safety. This alignment is meant to strengthen the index's credibility and keep it current with developments in AI governance.

The AI Safety Index evaluates companies across six key categories:

The scoring system assigns a letter grade (A to F) for performance in each category. For instance, a company may receive an "A" for strong risk assessment practices but a "D" for transparency; the category grades are then combined into a composite score that reflects its overall safety posture.
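The text does not specify how category grades are combined into a composite score. The sketch below is a minimal illustration under stated assumptions: letters map onto a US-style 4.0 grade-point scale, every category is weighted equally, and the average is rounded half up to the nearest letter. The `composite_grade` function, the point scale, and the cutoffs are all hypothetical and are not the Index's documented method.

```python
from typing import Mapping, Tuple

# HYPOTHETICAL scale: plain letters on a US-style 4.0 grade-point scale.
# This is an illustrative assumption, not the AI Safety Index's published method.
GRADE_POINTS = {"A": 4.0, "B": 3.0, "C": 2.0, "D": 1.0, "F": 0.0}

# Lower bounds for mapping an average back to a letter (round half up).
LETTER_CUTOFFS = [(3.5, "A"), (2.5, "B"), (1.5, "C"), (0.5, "D")]


def composite_grade(category_grades: Mapping[str, str]) -> Tuple[float, str]:
    """Return (mean grade points, overall letter) for per-category letter grades.

    Assumes every category carries equal weight (illustrative assumption).
    """
    if not category_grades:
        raise ValueError("at least one category grade is required")
    points = [GRADE_POINTS[grade.upper()] for grade in category_grades.values()]
    mean = sum(points) / len(points)
    # Walk the cutoffs from highest to lowest; the first one met gives the letter.
    for cutoff, letter in LETTER_CUTOFFS:
        if mean >= cutoff:
            return mean, letter
    return mean, "F"


if __name__ == "__main__":
    # Grades echoing the text: strong risk assessment, weak transparency.
    # The "Governance" grade is invented for illustration.
    grades = {"Risk Assessment": "A", "Governance": "C", "Transparency": "D"}
    mean, letter = composite_grade(grades)
    print(f"{mean:.2f} -> {letter}")  # prints "2.33 -> C"
```

Equal weighting and round-half-up are simply the most transparent choices for a sketch; an actual index might weight categories differently or average across reviewers before combining categories.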

The inaugural AI Safety Index report revealed concerning grades for major AI companies:

These grades highlight the urgent need for improved safety measures across the industry.

The report identified several weaknesses prevalent among AI companies:

Safety metrics are crucial for guiding AI development, helping ensure that technologies are reliable and secure as well as innovative. They give companies benchmarks against which to evaluate their safety practices relative to industry standards.

Inadequate safety standards can lead to significant real-world consequences, including:

Given the rapid advancement of AI technologies, there is a compelling case for regulatory oversight. A structured approach to AI governance, akin to what exists in the pharmaceutical industry, could help ensure that AI technologies are developed responsibly.

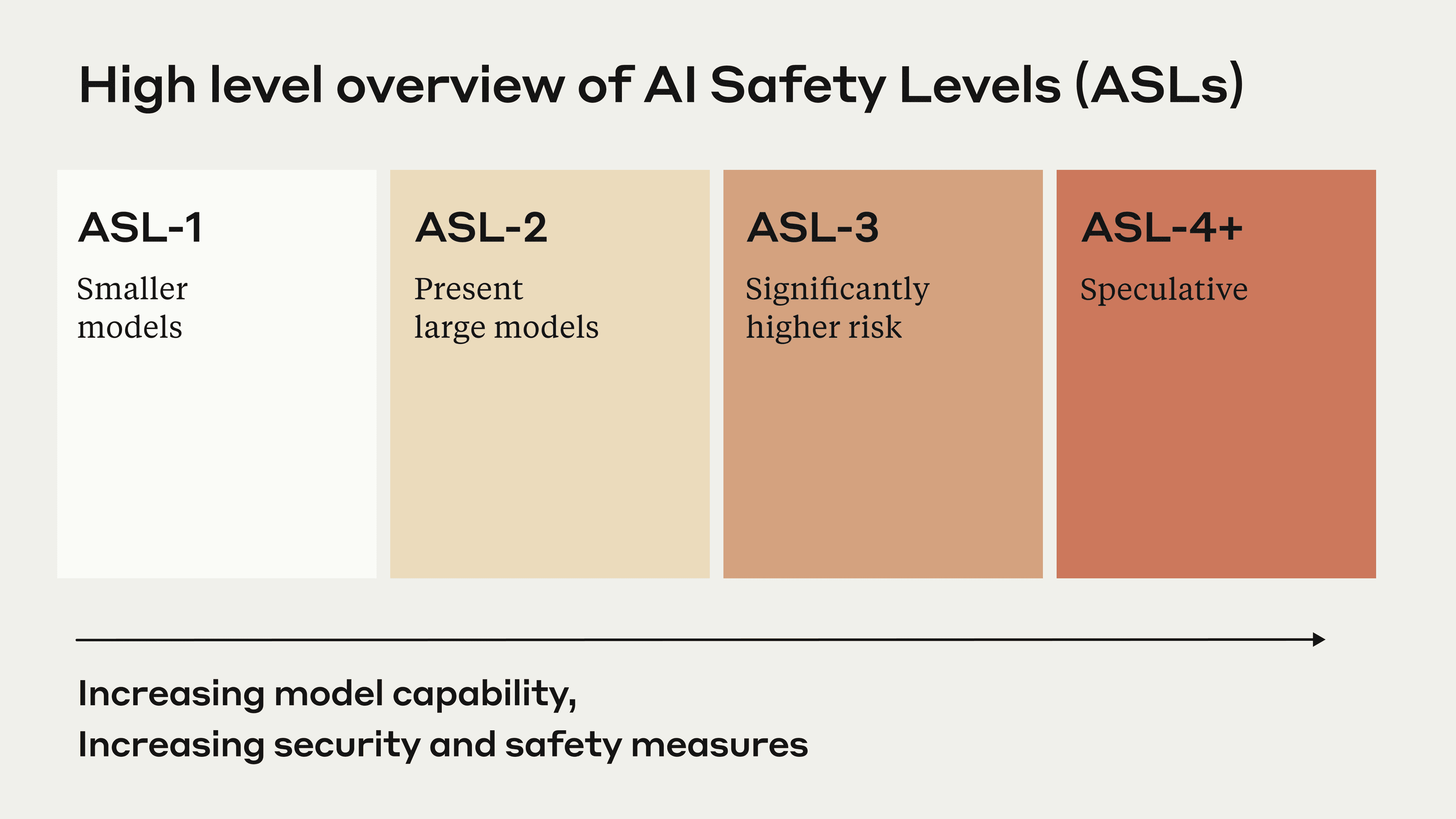

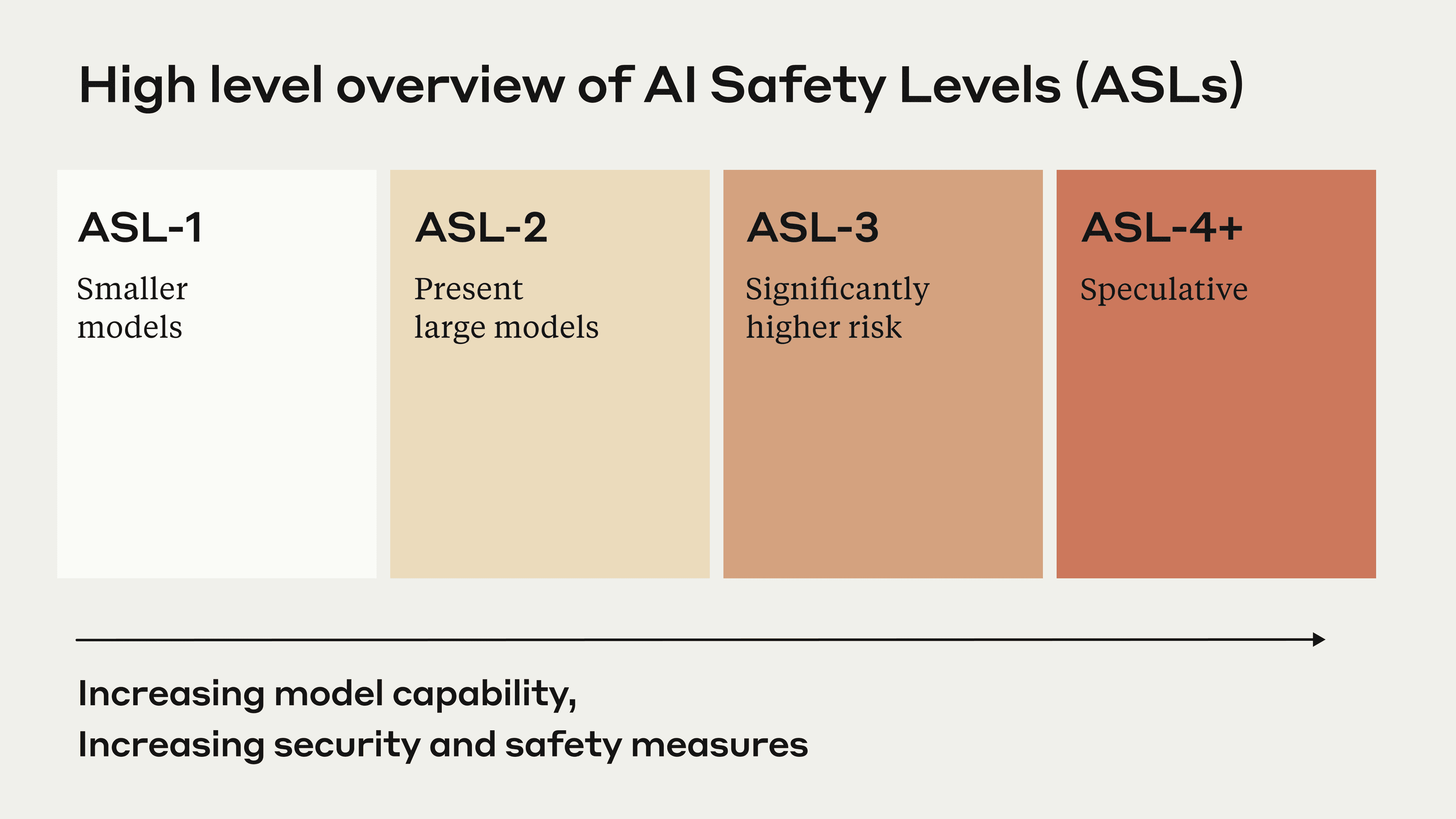

Two prominent AI safety frameworks include:

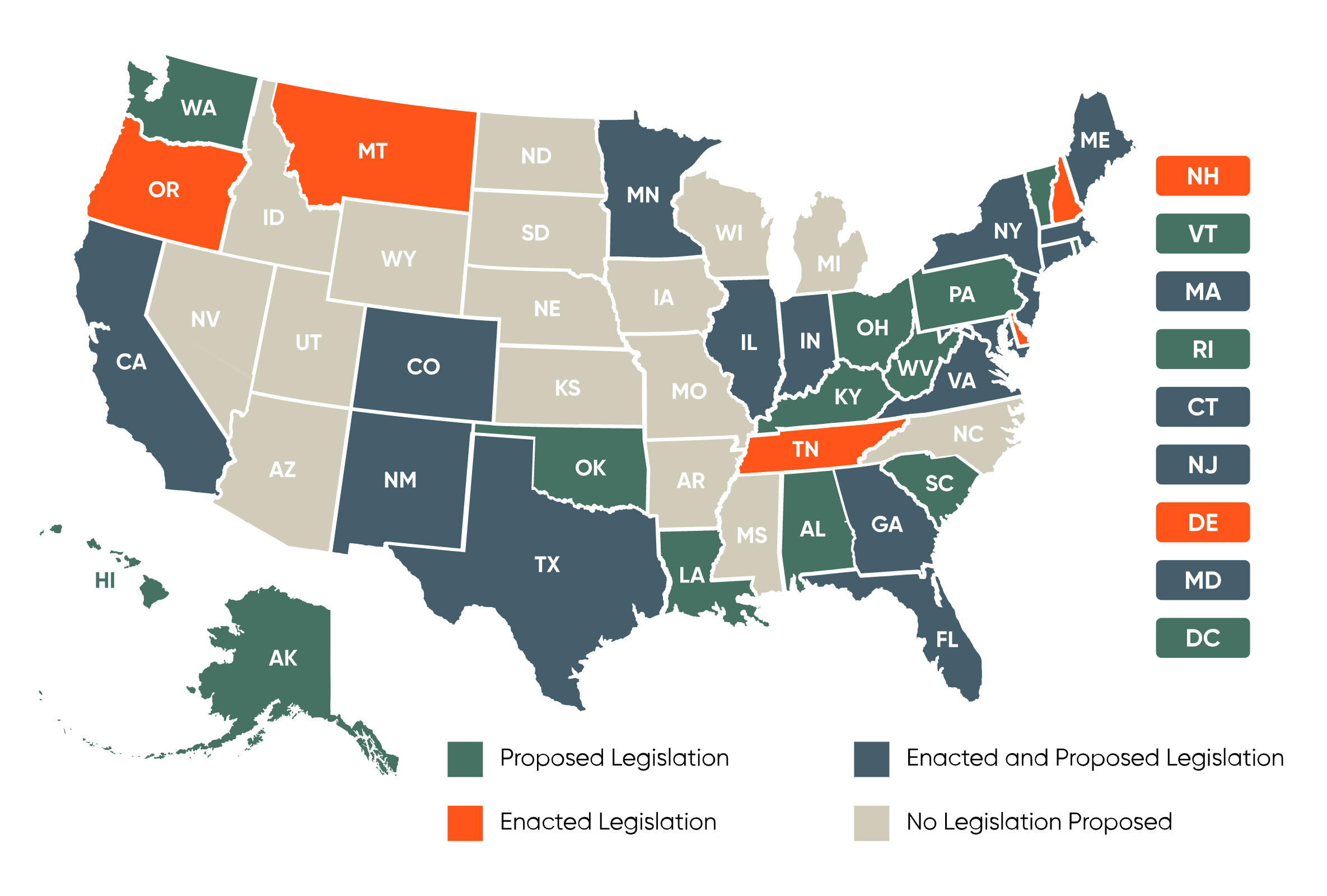

Regulatory approaches differ significantly across regions:

The AI Safety Index underscores the importance of learning from industry practices. Companies that adopt best practices in safety and transparency are more likely to foster public trust and mitigate risks.

Future iterations of the AI Safety Index may include expanded criteria for evaluation, ensuring that it remains relevant as AI technologies evolve. Incorporating feedback from industry stakeholders will also enhance its effectiveness.

Stakeholders, including tech companies, regulators, and civil society, must collaborate to strengthen AI safety. Open dialogue and shared responsibility will be essential for developing effective regulatory frameworks.

The long-term vision for AI safety metrics involves establishing universal standards that can guide responsible AI development globally. This would require international cooperation and commitment to ethical principles in AI.

The AI Safety Index provides a crucial framework for evaluating the safety practices of leading AI companies. The findings reveal significant gaps in safety measures, emphasizing the need for improved accountability and transparency in the industry.

As AI technologies continue to advance, establishing robust safety standards will be paramount. Collaboration among stakeholders, alongside effective regulatory measures, will help ensure that AI develops in a manner that prioritizes public safety and ethical considerations.

Key Takeaways:
